Visual manipulation

Scene understanding

Old neighbor

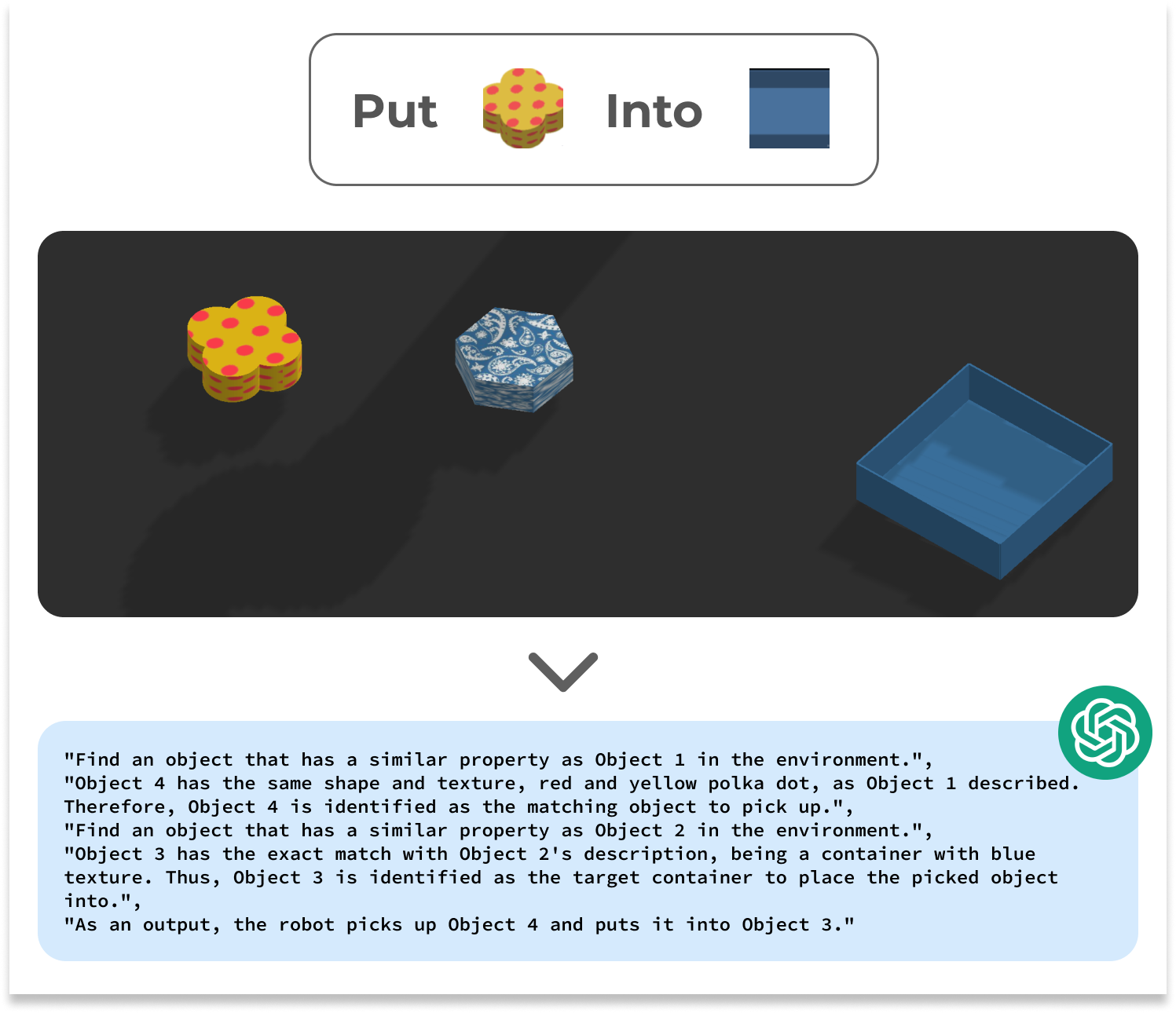

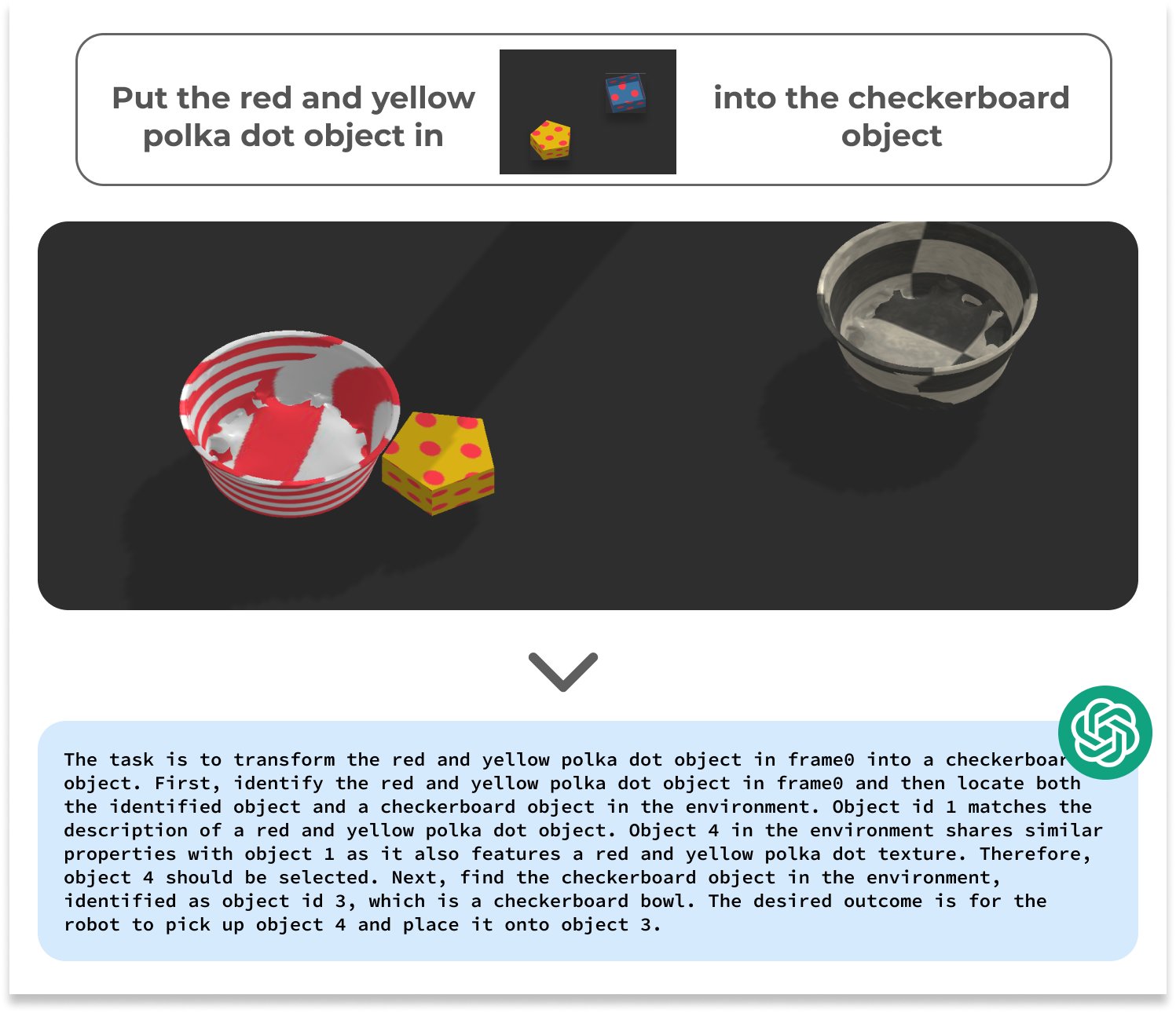

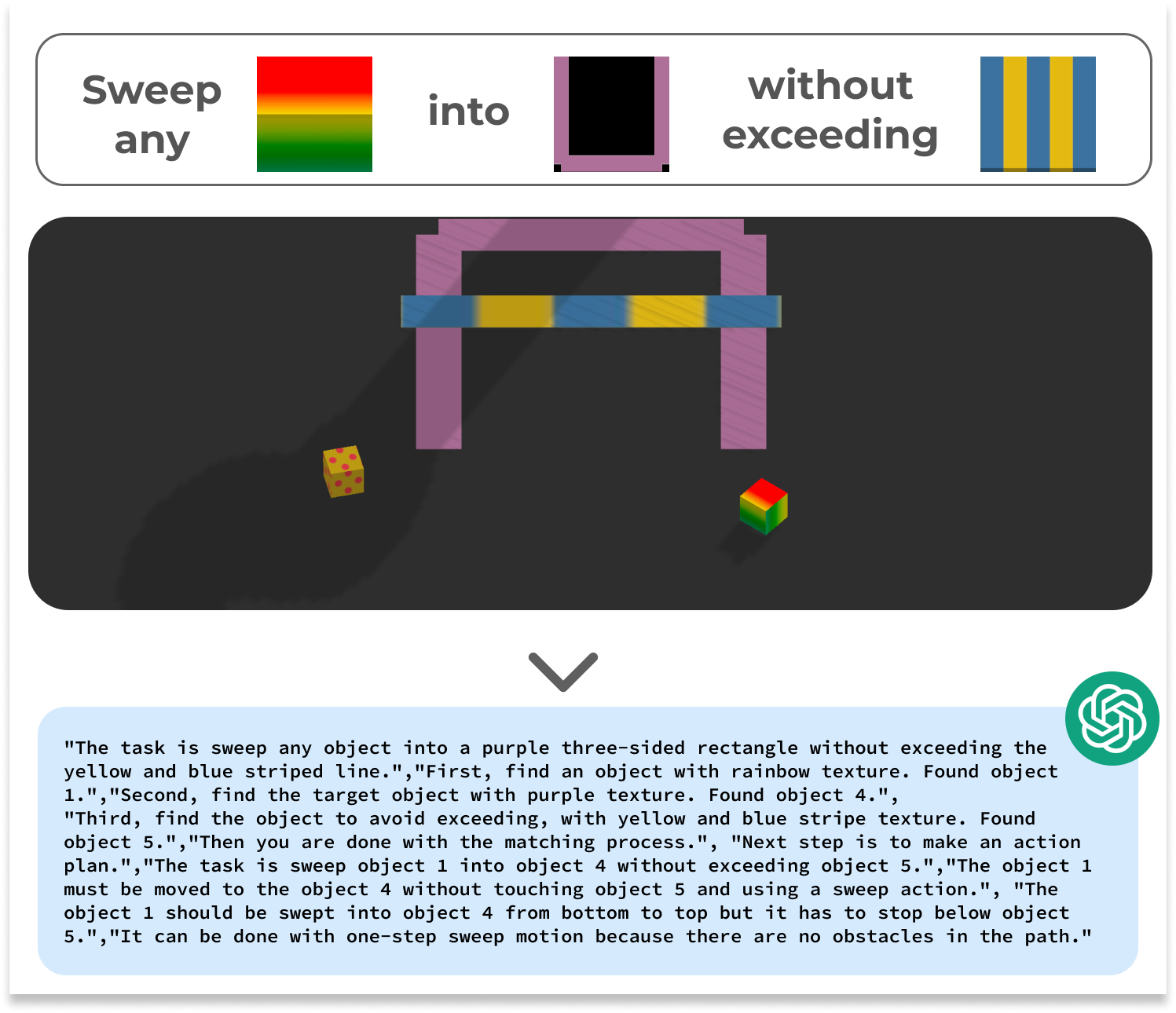

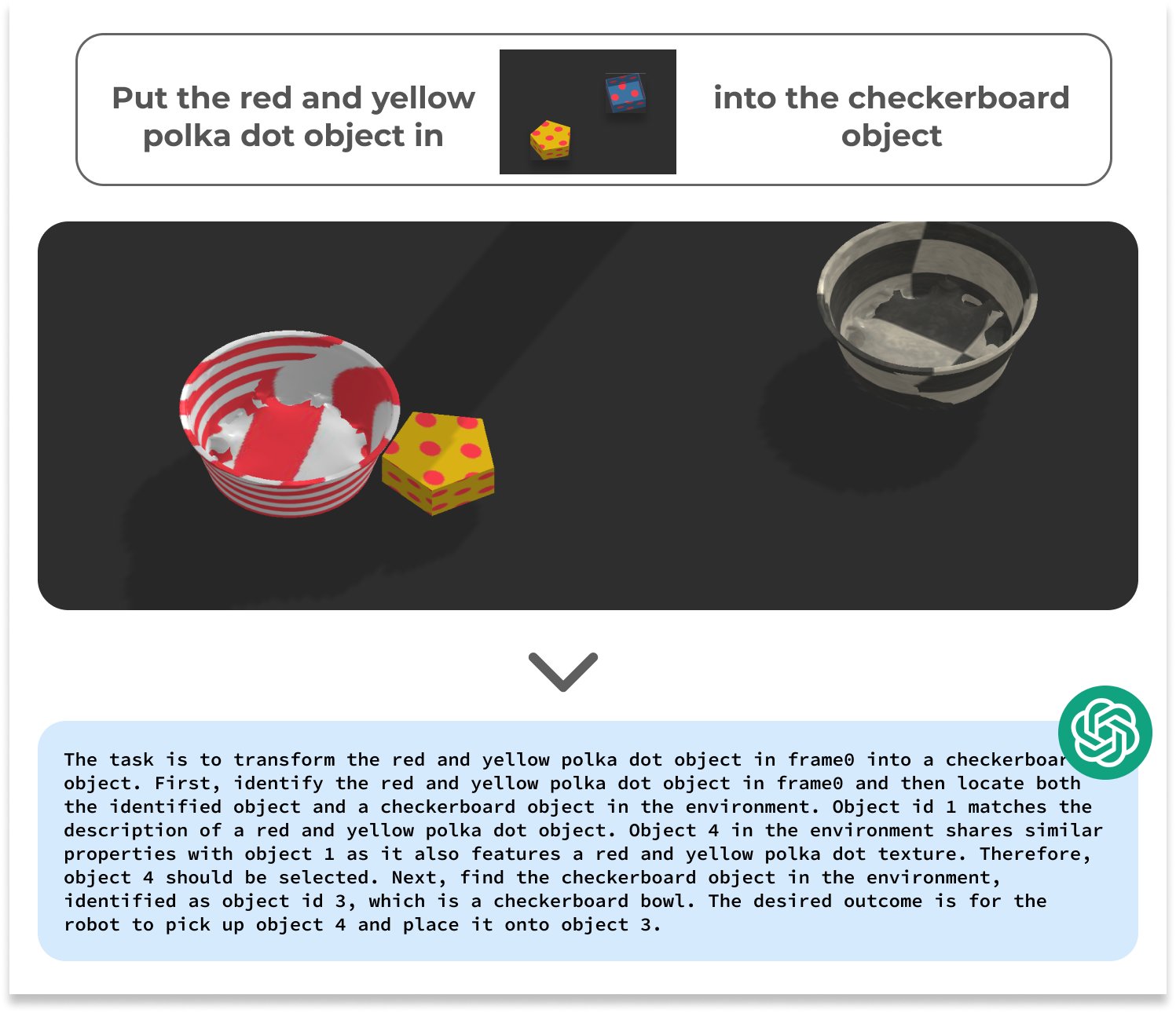

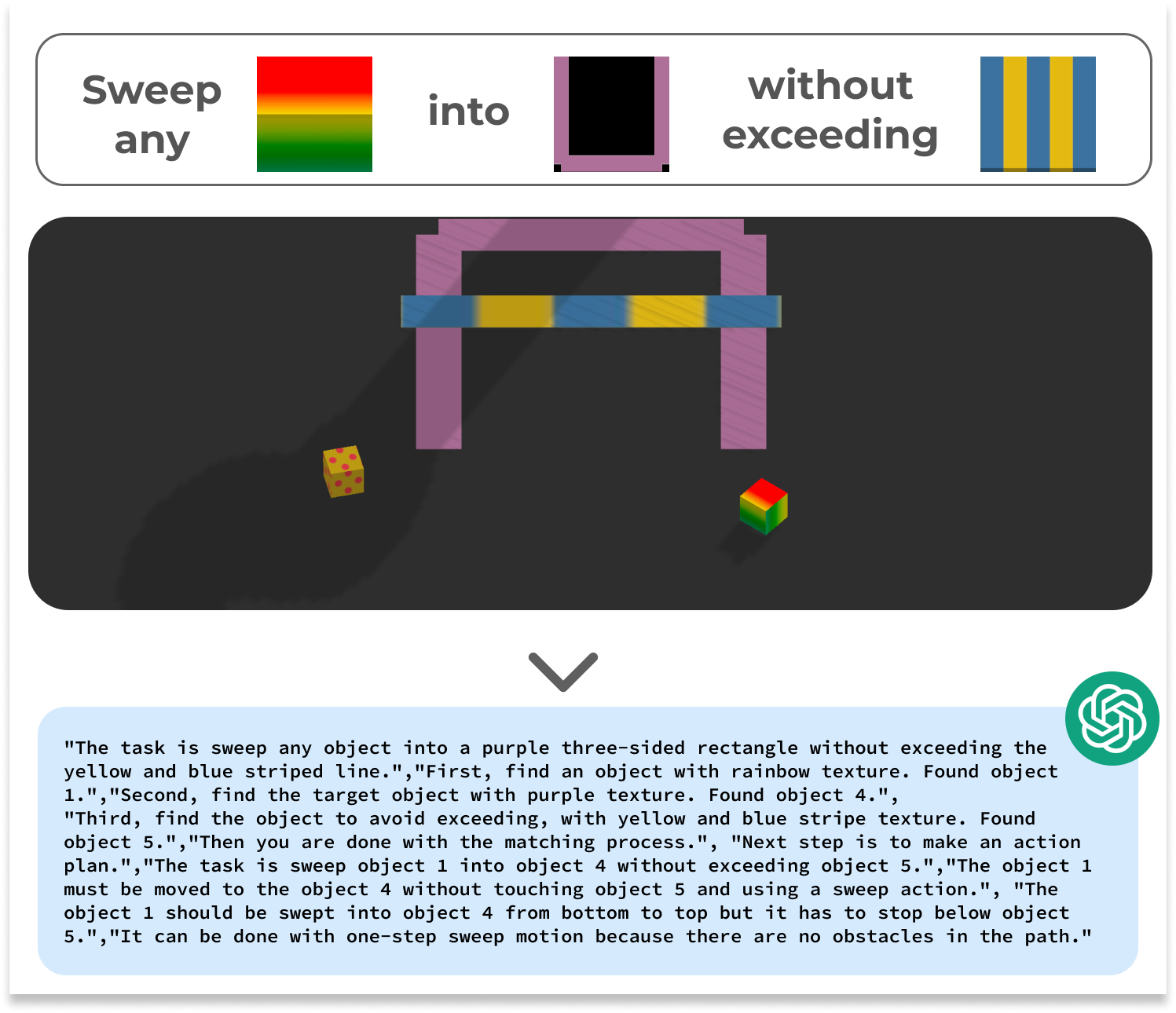

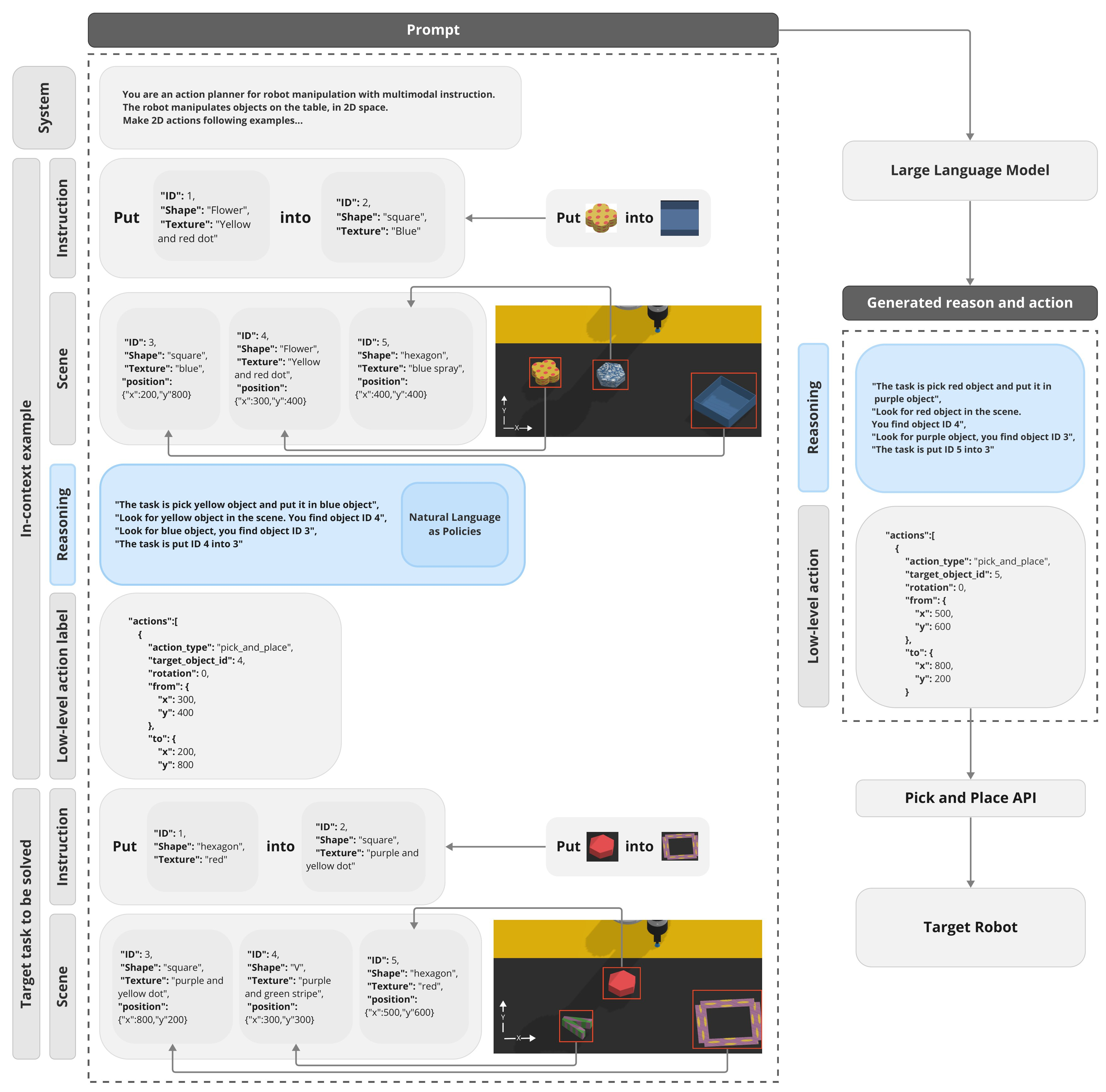

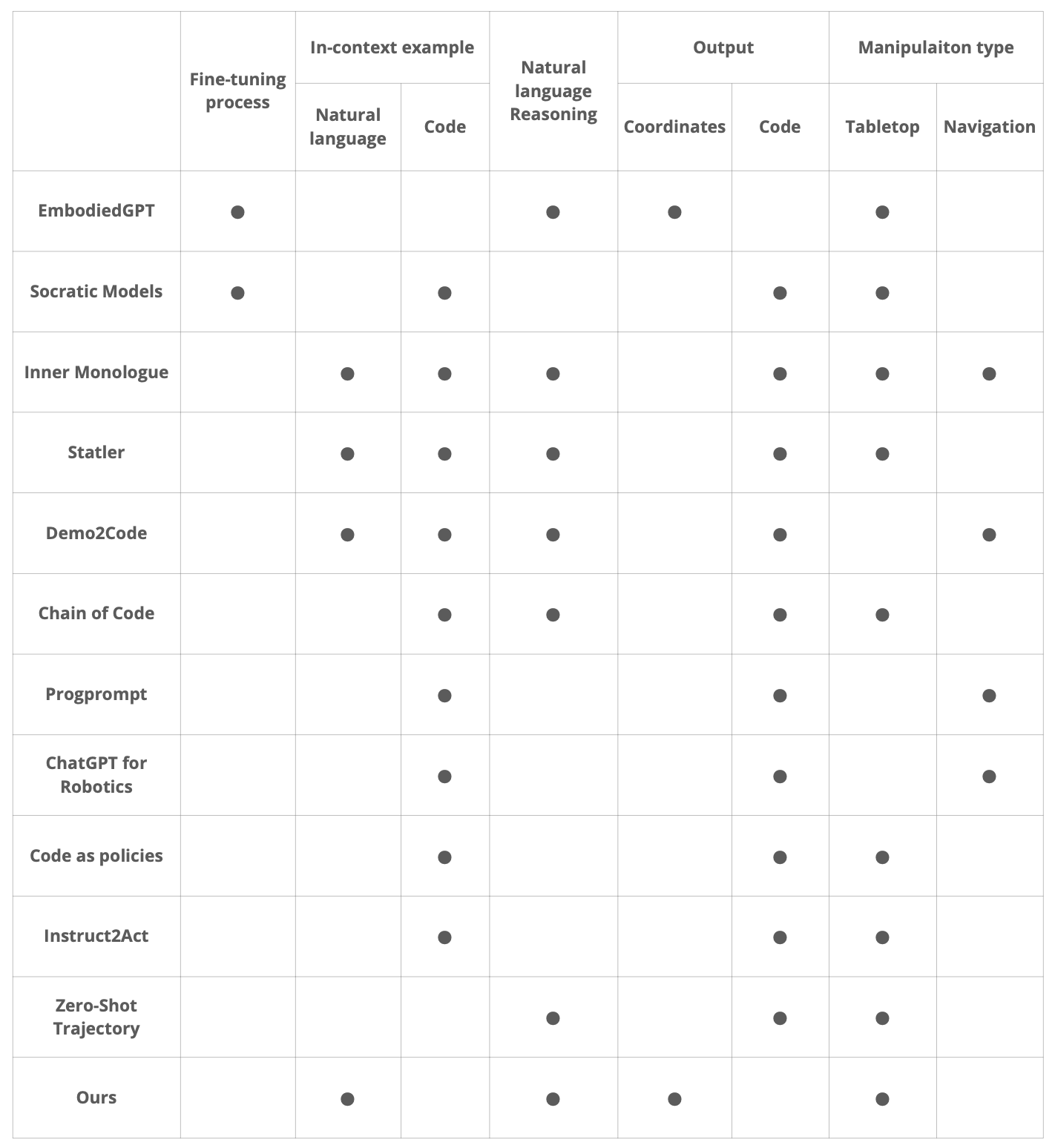

We present experimental results with LLMs applied to robotics action planning. LLMs have recently been applied to robotics action planning mainly through code generation, which converts complex high-level instructions into mid-level policy code. In contrast, our approach takes text descriptions of the task and the scene objects, formulates action planning through natural language reasoning, and outputs coordinate-level control commands. This reduces the need for intermediate code as the policy representation. We evaluate our approach on a multi-modal prompt simulation benchmark. Our prompt engineering experiments show that natural language reasoning significantly increases success rates compared with prompting without it. Furthermore, our approach illustrates the potential of natural language descriptions to transfer robotics skills from known tasks to previously unseen tasks.
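A minimal sketch of the text-in, coordinates-out interface described above might look like the following. It assumes a hypothetical prompt format in which each scene object is listed with a pixel-coordinate center, and the LLM ends its natural language reasoning with a line such as `Action: pick (x1, y1) place (x2, y2)`. The `SceneObject` fields, the `Action:` line format, and the function names are illustrative assumptions, not the paper's actual prompt or interface.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneObject:
    # Hypothetical object record: a name plus a bounding-box center in pixels.
    name: str
    center: Tuple[int, int]


def describe_scene(objects: List[SceneObject]) -> str:
    """Render scene objects as plain text that can be placed in an LLM prompt."""
    lines = [f"- {obj.name} at ({obj.center[0]}, {obj.center[1]})" for obj in objects]
    return "Objects in the scene:\n" + "\n".join(lines)


# Assumed (hypothetical) final-line format: "Action: pick (x1, y1) place (x2, y2)".
ACTION_RE = re.compile(
    r"Action:\s*pick\s*\((\d+),\s*(\d+)\)\s*place\s*\((\d+),\s*(\d+)\)",
    re.IGNORECASE,
)


def parse_action(llm_output: str) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Extract pick and place coordinates from the last matching action line."""
    matches = ACTION_RE.findall(llm_output)
    if not matches:
        raise ValueError("no coordinate-level action found in LLM output")
    x1, y1, x2, y2 = map(int, matches[-1])
    return (x1, y1), (x2, y2)


if __name__ == "__main__":
    scene = [SceneObject("red block", (120, 64)), SceneObject("green bowl", (200, 150))]
    print(describe_scene(scene))
    reply = (
        "The red block is at (120, 64) and the green bowl is at (200, 150). "
        "To put the block in the bowl, pick the block and place it on the bowl.\n"
        "Action: pick (120, 64) place (200, 150)"
    )
    print(parse_action(reply))  # ((120, 64), (200, 150))
```

Because the reasoning and the command share one text stream, the parser only looks at the final `Action:` line; the free-form reasoning before it is left untouched.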

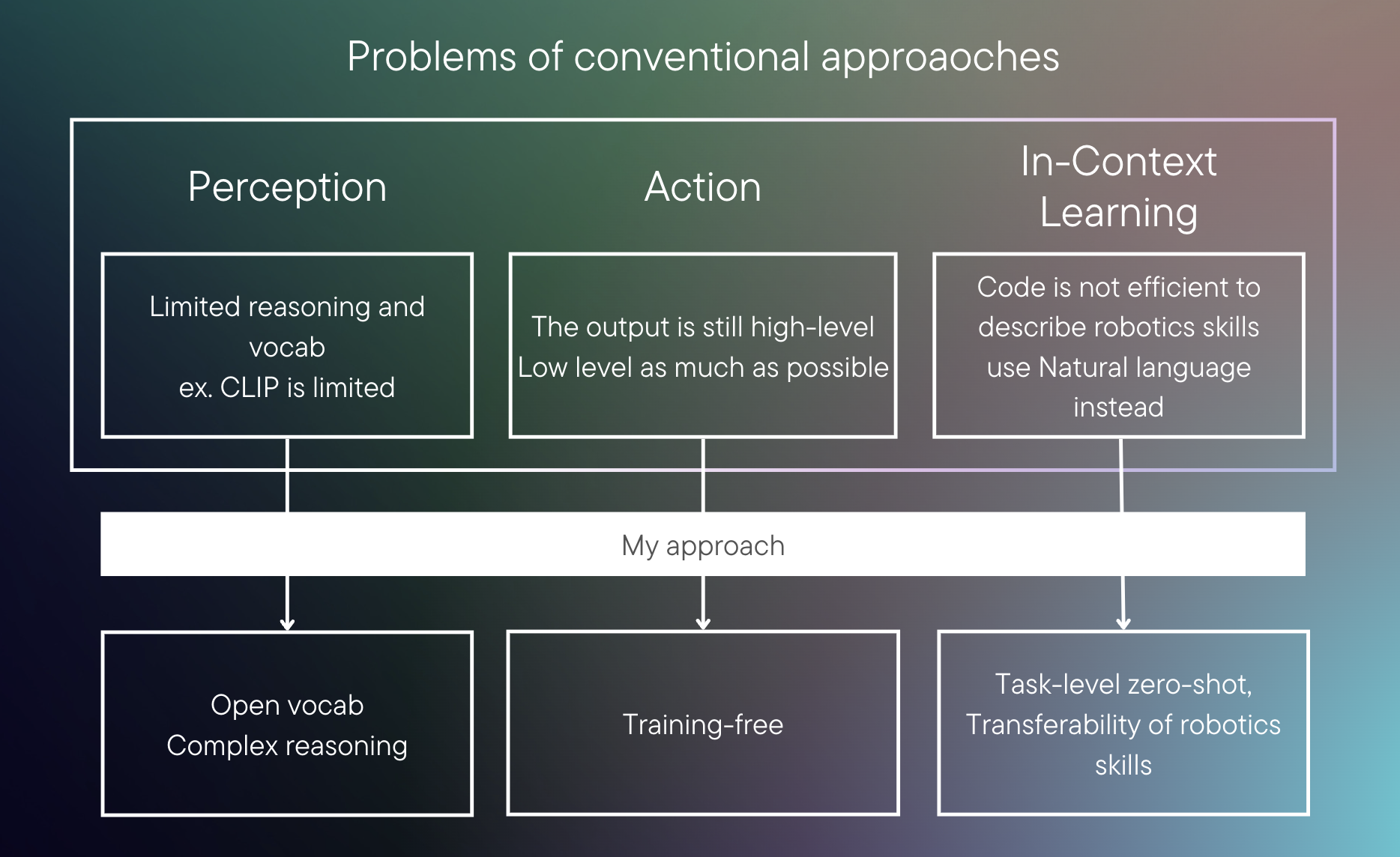

We identify three primary limitations in recent LLM-based code generation approaches. First, code lacks the high-level contextual meaning needed to describe embodied skills efficiently, because it is usually symbolic, only indirectly connected to the scene, and abstracted during in-context learning. Second, these approaches are limited by task-specific pre-defined APIs, such as CLIP. Relying on high-level APIs in the perception module limits flexibility, because the system cannot perform tasks beyond the scope of those APIs. Third, the LLM outputs policy code that calls high-level pre-defined APIs, so planning happens at the task level; this prevents flexible planning across different environments and the optimization of instance-level plans. We hypothesize that describing the whole planning process in natural language, instead of code, can help remove these limitations.

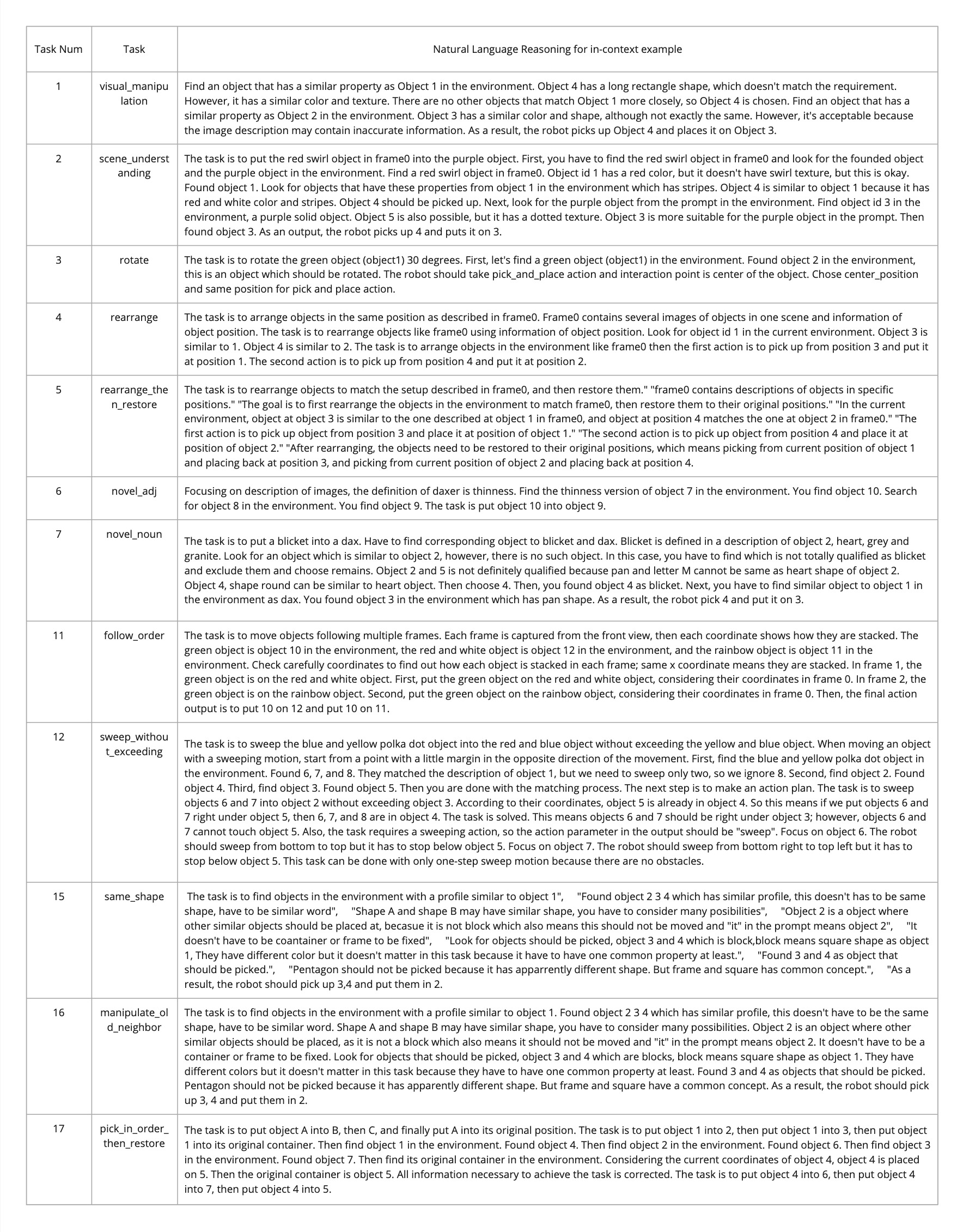

We provide one demonstration as an in-context example, whose planning step uses natural language reasoning instead of conventional code. For our ablation study, we remove the chain-of-thought (CoT) reasoning component from the in-context example to measure the importance of natural language reasoning. We use low-level APIs (pick-and-place or sweep) to control the robot arm. We present specific examples of natural language reasoning in Table.
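The one-shot prompting setup and its ablation can be sketched as follows. This assumes a hypothetical in-context example made of a task description, a scene description, a reasoning section, and a final action line; passing `include_reasoning=False` drops the reasoning section from the example, mirroring the ablation that removes the CoT component. The `Demo` structure, the section labels, and the `pick_and_place` / `sweep` signatures are assumptions for illustration, not the paper's actual prompt or robot API.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[int, int]


@dataclass
class Demo:
    # Hypothetical in-context example: one solved task written in natural language.
    task: str
    scene: str
    reasoning: str
    action: str


def build_prompt(demo: Demo, task: str, scene: str, include_reasoning: bool = True) -> str:
    """Assemble a one-shot prompt; include_reasoning=False gives the no-CoT ablation."""
    example = [f"Task: {demo.task}", demo.scene]
    if include_reasoning:
        example.append(f"Reasoning: {demo.reasoning}")
    example.append(f"Action: {demo.action}")
    # The query ends with "Reasoning:" or "Action:" so the LLM continues from there.
    query = [f"Task: {task}", scene, "Reasoning:" if include_reasoning else "Action:"]
    return "\n".join(example) + "\n\n" + "\n".join(query)


# Hypothetical low-level primitives; here they only record the command that
# a real system would send to the robot arm.
executed: List[tuple] = []


def pick_and_place(pick: Point, place: Point) -> None:
    executed.append(("pick_and_place", pick, place))


def sweep(start: Point, end: Point) -> None:
    executed.append(("sweep", start, end))


if __name__ == "__main__":
    demo = Demo(
        task="Put the red block into the green bowl.",
        scene="Objects in the scene:\n- red block at (120, 64)\n- green bowl at (200, 150)",
        reasoning="The red block is at (120, 64) and the bowl is at (200, 150), "
        "so pick at the block and place at the bowl.",
        action="pick (120, 64) place (200, 150)",
    )
    print(build_prompt(demo, "Put the blue block into the green bowl.",
                       "Objects in the scene:\n- blue block at (40, 90)\n- green bowl at (200, 150)"))
    pick_and_place((40, 90), (200, 150))
    print(executed)
```

Keeping the primitives this low-level means the LLM, not a pre-defined perception API, is responsible for choosing the coordinates passed to each call.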

Our approach does not output policy code, does not use pre-defined APIs for perception, and uses only natural language reasoning.

@misc{mikami2024natural,
  title={Natural Language as Policies: Reasoning for Coordinate-Level Embodied Control with LLMs},
  author={Yusuke Mikami and Andrew Melnik and Jun Miura and Ville Hautamäki},
  year={2024},
  eprint={2403.13801},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}